Module 5: Implementation, Monitoring and Evaluation

Module Introduction

At this point in the process, the planning group has identified and selected the evidence-based interventions to include in your state plan. Module 5 focuses on the next step in the planning process: preparing for implementing, monitoring, and evaluating these interventions. These tasks are accomplished with the use of logic models, implementation plans, and evaluation plans. These tools help you and your partners work through the tasks associated with making interventions a reality and measuring progress toward achieving goals. To follow the gardening analogy, this is the point when you get ready to plant by preparing the soil and gathering your tools.

| Module Title | Topics Covered | Gardening Analogy |
|---|---|---|
| Module 1 | An Introduction to Public Health Planning | |
| Module 2 | Working Collaboratively with Partners, Pre-Planning and Launching the Planning Process with an Initial Meeting | |
| Module 3 | Presenting the Data and Defining the Problem | |
| Module 4 | Finding Solutions to the Problem | |
| Module 5 | Preparing to Implement Solutions | |

Module 5 Learning Objectives:

Upon completion of this module, you should be able to:

- Describe the purpose and key components of a logic model

- Describe the purpose and core components of an implementation plan

- Define the purpose of and steps to creating an evaluation plan

- Differentiate between process and outcome evaluation and performance monitoring

- Describe what indicators are and how to develop them

- Summarize steps involved in developing logic models, implementation plans, and evaluation plans

Time estimate for completion:

It should take approximately 75 minutes to complete this module.

Section I: Using Logic Models in Planning for Implementation, Monitoring, & Evaluation

Logic Model Definition and Purpose

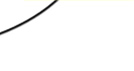

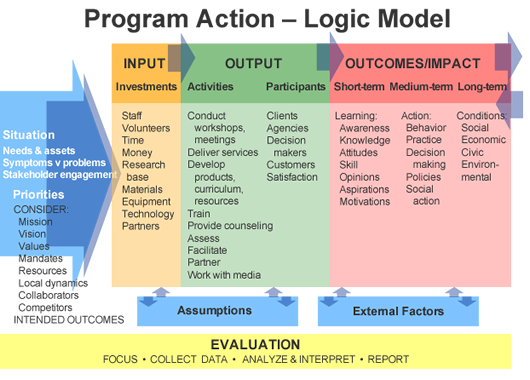

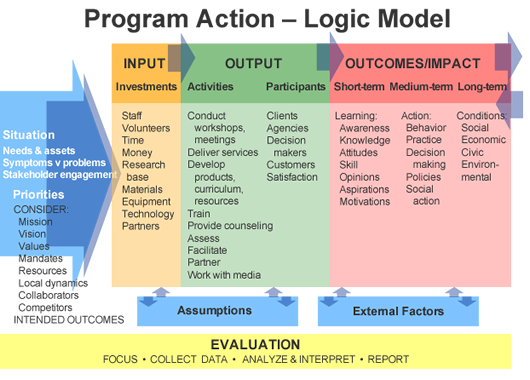

Logic models are tools that provide a simplified picture of a program, initiative, or intervention. They are used in planning program implementation, monitoring, and evaluation. A logic model links intervention components in a chain of reasoning that shows relationships among the resources invested, activities that take place, and the changes that occur as a result. Logic models demonstrate why doing an activity will result in a specific outcome.

Logic models are the starting point for developing both implementation and evaluation plans. They connect the work being performed with the results or outcomes you are trying to achieve. You and your partners should develop a logic model for each major intervention you intend to implement.

Working together on logic models produces a shared understanding and perspective on the problem, the intervention, and the intended outcomes. Using a group process keeps everyone focused on the work to be performed and on measuring the results.

While there are many variations on logic models, the basic components are the same. In this course, we will use the logic model seen below, developed by the University of Wisconsin Extension Service.

Click here to download a blank version of this Logic Model template.

Inputs, Outputs, and Outcomes

The main part of the logic model consists of the Inputs, Outputs, and Outcomes.

Inputs are the resources, contributions, and investments that go into the program. This includes staff, volunteers, partners, funding, expertise, equipment, technology, materials, site, time, CDC technical assistance, etc.

Outputs are the activities, services, events and products that reach people who participate or who are targeted.

- Activities are the action elements of the intervention.

- Participants are the target audiences for each activity.

Outcomes are the results or changes for individuals, groups, organizations, communities, or systems. Outcomes tell us what difference the program makes and occur along a path from shorter-term achievements to longer-term achievements, or impact.

- Short-Term Outcomes typically include changes in knowledge, skills, beliefs, and attitudes. They are immediately measurable at the completion of the intervention.

- Medium-Term Outcomes include changes in actions or behavior. They are generally measurable six months to one year after completion of the intervention.

- Long-Term Outcomes refer to the ultimate benefit of the intervention, such as change in disease incidence or prevalence. The time period associated with these outcomes varies by how long it takes to detect measurable changes.
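
As a rough illustration, the main columns of a logic model can be captured in a simple data structure so that a workgroup can see at a glance which columns still need to be filled in. The Python sketch below is only one possible layout: the class name `LogicModel`, its field names, and the example entries are hypothetical and are not part of the University of Wisconsin template.

```python
from dataclasses import dataclass, field


@dataclass
class LogicModel:
    """Illustrative container for the main columns of a logic model."""
    inputs: list[str] = field(default_factory=list)        # resources invested
    activities: list[str] = field(default_factory=list)    # outputs: what is done
    participants: list[str] = field(default_factory=list)  # outputs: who is reached
    short_term: list[str] = field(default_factory=list)    # knowledge, skills, beliefs, attitudes
    medium_term: list[str] = field(default_factory=list)   # actions or behavior
    long_term: list[str] = field(default_factory=list)     # ultimate benefit

    def missing_components(self) -> list[str]:
        """Return the names of any columns that are still empty."""
        return [name for name, items in vars(self).items() if not items]


# Hypothetical entries for a diabetes self-management class.
model = LogicModel(
    inputs=["staff", "funding", "site"],
    activities=["hold self-management classes"],
    participants=["adults with diabetes"],
    short_term=["increased knowledge of self-management"],
    medium_term=["regular blood glucose monitoring"],
)

print(model.missing_components())
```

In this example the long-term outcome column has been left empty on purpose, so the check flags it as still needing attention.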

Situation, Priorities, Assumptions, and External Factors

The logic model presented here also contains boxes outside the main diagram.

Situation refers to the problem being addressed and the factors surrounding it. At this stage in the process, you and your partners have already used data to identify the situation and contributing factors (see Module 3).

Priorities are selected after analysis of the problem, potential solutions, and available resources. Your priorities have been selected to reflect the segment of the population, the intervention point (from the population flow map), and the aspect of the problem you are addressing (see Module 4).

Assumptions are the beliefs we have about the program, the people involved, the context, and the way we think the program will work. Assumptions influence the program decisions we make, so it is important to consider what assumptions are being made and ensure that there is evidence to support the actions laid out in the intervention.

External Factors are environmental factors that interact with and influence the program’s successes. This could include political climate, culture, economy, social determinants, etc. You will need to assess how external factors are likely to influence the program's ability to achieve expected results and determine what can be changed or mitigated through risk management and contingency plans, if necessary.

Linking Logic Model Components

In all logic model formats, arrows are used to link and show sequences among components. The placement of arrows conveys the logic of the program and answers the questions about which activities produce which outputs and outcomes. For example:

- Vertical Arrows drawn between objects within a column indicate a temporal sequence of activities.

- Horizontal Arrows drawn between objects across columns indicate that one leads to the other.

Many logic models are linear, showing arrows only going horizontally in one direction linking one object to the next. This is not always the case, though. Arrows can go in both directions, and multiple activities can lead to a single outcome, or a single activity can lead to several outcomes.

Building Logic Models Using “If…then” Statements

Logic Model Tutorial

For more information on logic model development, visit the online tutorial available through the University of Wisconsin Extension Service Program Development and Evaluation website: http://www.uwex.edu/ces/lmcourse/.

There are two basic ways to build a logic model. You can start with the problem statement and work toward the outcomes (forward, left to right), or you can start with the long-term outcome and work to the problem statement (backwards, right to left). Working from the problem to the solution may be easier, and it also makes it easier to develop the accompanying implementation plan.

Logic models can be constructed by working through a series of “If ___, then ___” statements, in which the blanks are filled in with an action and a result. For example:

“If people can manage their diabetes, then rates of serious complications of diabetes would decrease.”

“If, then” statements focus on the changes that will occur if the proposed intervention occurs. Laying out the problem statement in combination with the activities in the form of “If, then” statements will produce both the outputs (activities, participants, products) and the short, intermediate, and long-term outcomes. These statements also serve as a way to check that the link between each step from the problem to the solution makes sense.
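
One way to check that each link makes sense is to confirm that the "then" of one statement becomes the "if" of the next. The Python sketch below illustrates that check under simple assumptions: the statements are hypothetical examples, the helper `broken_links` is not part of any course tool, and links are compared as exact text.

```python
# Each pair is (if, then). The statements are hypothetical examples.
statements = [
    ("self-management classes are offered", "people learn to manage their diabetes"),
    ("people learn to manage their diabetes", "people can manage their diabetes"),
    ("people can manage their diabetes", "rates of serious complications decrease"),
]


def broken_links(pairs):
    """Return positions where a 'then' does not match the next statement's 'if'."""
    return [
        i for i in range(len(pairs) - 1)
        if pairs[i][1] != pairs[i + 1][0]
    ]


# An empty list means every step connects to the one after it.
print(broken_links(statements))
```

A non-empty result points to the step where the chain of reasoning skips ahead and another statement may be needed.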

Relationship Between Logic Models, Implementation Plans, and Evaluation Plans

The next two sections of this module discuss Implementation and Evaluation Plans. The left side of the logic model represents the core information contained in your Implementation Plan and what you will measure in your performance monitoring system. The right side of the logic model represents the results or outcomes expected from the intervention. The right side of the logic model provides key outcome evaluation questions addressed in your evaluation plan.

Beginning work on implementation and evaluation plans during the planning phase, rather than waiting until the plan document is completed, brings up issues for discussion and decision-making such as resources needed, organizations responsible, timelines, potential scheduling conflicts, and other practical matters. It also creates the expectation that implementation will flow directly from the planning phase without delay.

Section I Summary & Activity

Section I introduced logic models, including their purpose and main components. To ensure that you have a good understanding of logic models before we move forward, complete the following activity:

- Locate 1-2 sample logic models from existing programs that you and your partners are working on or have worked on in the past, and review them.

- Do the sample logic models adequately convey the logic of the program and demonstrate which activities produce which outputs and outcomes?

- What are some of the benefits of working on developing a logic model for your selected interventions with coalition partners?

Section II: Implementation Plans

Implementation Plan Definition and Purpose

Implementation Plans (also called work plans or action plans) are tools that break down larger projects into all of the tasks or activities necessary to put them into action. These tasks and activities are assigned due dates and a person or organization responsible for completing them. Implementation plans make it clear:

- What needs to be done

- Who is responsible for doing what

- What resources are needed from whom

- How long it will take to complete the task or activity

- The current status of the intervention

This information is particularly helpful when multiple partners from different organizations will be working together on implementing the selected interventions. The implementation plan can be used as an active project management tool to organize and track project progress.

Implementation Plan Core Elements

To be useful project management tools, all implementation plans require the same necessary core elements described in the table below.

| Core Elements of an Implementation Plan | |
|---|---|
| Tasks | Each strategy or intervention is broken down into all of the subsidiary tasks or activities needed to accomplish it. |
| Person Responsible | Each task or activity is assigned to a person or organization (or the lead agency and supporting partners) responsible for completing it. |
| Timeframe | Each task is assigned a timeframe in which it will be performed, including a beginning and end date. (If the work continues throughout the project period, it can be labeled as ongoing.) |
| Status/Progress Measure | The current status of each task is identified and should be updated at regular intervals in order to monitor the work being done. Examples of status include: not yet begun, in progress, completed (with or without a percentage indicator), delayed, cancelled, changed, etc. |
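
To show how these core elements can work as an active tracking tool, here is a minimal Python sketch. It assumes each task is stored with the four core elements; the `Task` class, the `overdue` helper, the partner names, and the dates are all hypothetical.

```python
from dataclasses import dataclass
from datetime import date


@dataclass
class Task:
    name: str                      # Tasks
    responsible: str               # Person Responsible
    start: date                    # Timeframe: beginning date
    end: date                      # Timeframe: end date
    status: str = "not yet begun"  # Status/Progress Measure


def overdue(tasks, today):
    """Return names of tasks past their end date that are not completed or cancelled."""
    return [
        t.name for t in tasks
        if t.end < today and t.status not in ("completed", "cancelled")
    ]


# Hypothetical plan entries.
plan = [
    Task("Recruit class instructors", "Partner A", date(2024, 1, 8), date(2024, 2, 2), "completed"),
    Task("Reserve class sites", "Partner B", date(2024, 1, 15), date(2024, 2, 16), "in progress"),
    Task("Hold first class session", "Partner A", date(2024, 3, 4), date(2024, 3, 4)),
]

print(overdue(plan, today=date(2024, 3, 1)))
```

Reviewing a list like this at regular intervals is one simple way to keep the status column current and to spot tasks that need follow-up.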

Implementation Plan Optional Elements

Implementation plans may also contain optional elements that provide additional information for each task, as described in the table below.

| Optional Elements in an Implementation Plan | |
|---|---|
| Performance Measures | Predetermined performance measures gauge how well a task was executed. |
| Notes | Information related to the status can be entered here to serve as an explanation regarding why expected progress has not been achieved or if the task has been completed ahead of schedule. |
| Resources Required/Budget | Lists the major resources or funds needed to complete the task. |
| Results/Expected Outcome | Describes the expected results of the task being accomplished, typically short-term or intermediate outcomes that occur within the timeframe of the implementation plan. |
| Products | Lists anything to be created or developed as part of the task. |

Creating Implementation Plans

You will need to prepare separate implementation plans for each intervention. To begin creating the implementation plans, you will use the information already prepared as part of each intervention’s logic model. The left side of the logic model (inputs and outputs) represents the core information that must be included in each implementation plan.

There are many different approaches to designing implementation plans, and there are software programs (such as Microsoft Project) that can be used to generate them. Click here to download a standard implementation plan template.

Implementation Plan Organization

Each intervention can be viewed as going through a series of phases over time:

- Pre-Implementation: Planning and Development

- Implementation: Roll-out

- Implementation: Maintenance

- Expansion (to new sites)

- Dissemination (with new partners)

As a result, implementation plans are frequently organized chronologically to correspond to different phases of the implementation process. They are reviewed and updated as each phase is completed, and adjustments are made as needed in implementation plan sections for upcoming phases. Click here for a table that describes tasks associated with each of these chronological phases of implementation.

Types of Implementation Plans

There are a number of different types of implementation plans, each of which focuses on a particular portion of the implementation process. Types of implementation plans include:

- Training and education plans

- Communication and marketing plans

- Policy and advocacy plans

Click here for a table that compares these other types of implementation plans and provides tasks and chronological phases specific to each. A standard implementation plan template can be adapted for use with these other plan types.

Multiple Organization Implementation Plans

Implementation plans are used by project managers within organizations to determine the allocation of work among employees, critical paths and timelines for activities, and resources required to accomplish the project by the deadline. Implementation plans developed by multiple organizations working together make it clear which organization is responsible for supplying different resources, implementing different activities, and on what schedule the intervention will be implemented.

The multiple organization implementation plan serves as a written charter that provides much more detail than a Memorandum of Understanding. Each participant should bring the proposed implementation plan back to their respective organizational leadership for review and approval. State Department of Health staff also should review the different implementation plans to determine whether any gaps exist, any organizations are overcommitted, or schedules need to be adjusted to allow for the completion of one implementation plan’s activities necessary for the next set to begin. Review of implementation plans also enables organizations to take advantage of economies of scale and collaboration.

Section II Summary & Activity

The preceding section described implementation plans and their major components. Now, consider how these concepts will apply to your coalition. Watch the video below, in which Florida and Arkansas Diabetes Prevention and Control Program (DPCP) staff discuss implementation plans. Then, answer the questions that follow. Click here for a worksheet to record your answers.

- How is an implementation plan, or action plan, different from the state plan?

- How can preparing the implementation plan for different strategies/interventions create a sense of buy-in on the part of coalition members and partners?

The next step is to plan out how to evaluate your efforts.

Section III: Planning for Evaluation

Evaluation Plans

Evaluation plans do not have to include a list of activities, assigned personnel, and timelines related to the evaluation. However, this information should be captured as part of the implementation plan.

Evaluation involves the collection of information about the activities and outcomes of programs to make determinations about or improve program effectiveness. Systematic evaluation efforts are essential to improving program effectiveness and guiding decision-making, and a strong evaluation plan is needed to ensure that evaluation efforts are successful.

Evaluation plans are organized around a set of evaluation questions about program implementation and outcomes. The plan lays out all of the required components to conduct an evaluation, and clarifies what needs to be done to assess processes and outcomes and how you intend to use the results.

In this next section, we will define key terms related to evaluation, review different types of evaluation, and discuss the core components of evaluation and monitoring plans. Throughout this section, we will reference the Evaluation Plan Workbook and the associated Evaluation Plan Worksheets, which contain information to consider and steps to complete while developing evaluation plans. It may be useful to have these documents in front of you as you complete this section of Module 5.

The CDC Framework for Program Evaluation

RE-AIM is another commonly used evaluation framework that addresses the effectiveness of your efforts to expand reach and scale of your interventions. Click here to download an overview of the RE-AIM framework.

The approach presented in this module follows the CDC’s Framework for Program Evaluation. The framework provides a systematic way to approach the evaluation process using a set of six steps and four standards, as shown in the diagram below. At this point, it is helpful to consider where you are in this framework.

For example, by assembling your planning group, you have completed the first step, “Engaging Stakeholders.” By creating intervention workgroups to build logic models and implementation plans together, you have completed the second step, “Describing the Program.” Creating evaluation plans falls under the “Focusing the Evaluation Design” step. Each workgroup will create at least one evaluation plan for an intervention that also has a logic model and implementation plan. To guide you through the development of evaluation plans, please consult the Evaluation Plan Workbook.

Developing Evaluation Questions

The first step in focusing the evaluation design is to develop evaluation questions. Evaluation questions create the focal point from which data collection, analysis, and reporting flow. Good evaluation questions:

- Yield key information on performance, reach, and outcomes

- Address the concerns of stakeholders, funders, and partners

- Can feasibly be answered

- Connect to components in your logic model and implementation plans

- Address both how the intervention was implemented (process) and what results were achieved (outcome)

If you don’t ask questions, you lose the opportunity to collect data and gather important information. Since time and resources for evaluation are usually limited, prioritize the evaluation questions and assess their value by asking, “How will we use this information? Will this information help us improve the intervention? Will this information help us achieve the outcomes we want?”

Process Evaluation

Process Evaluation focuses on intervention activities and outputs. Process evaluation questions focus on how the intervention actually was implemented compared to the initial plan. The answers to process evaluation questions offer feedback for program improvement. The information gathered can be used to:

- Keep an intervention on track

- Provide a form of accountability to partners

- Correct implementation problems as they arise

- Explain how certain results are achieved

- Replicate the intervention

These questions come directly from your implementation plan and logic model activities and outputs. The answers to the questions come through monitoring systems put in place to regularly collect implementation data.

Use the Evaluation Plan Workbook Section 3A: Focus the Evaluation – Evaluation Questions, Process Evaluation portion on page 3 to help develop process evaluation questions.

Process Evaluation Factors

Click here for this table in PDF format.

| Factor | Sample Questions |
|---|---|
| Participation | |
| Content | |
| Fidelity | |
| Timeliness / Efficiency | |
| Quality | |
| Satisfaction | |
| Context | |

Outcome Evaluation

Outcome Evaluation focuses on the results, or outcomes, of the intervention. The outcomes reflect the amount of change that has occurred from before the intervention started (baseline) to after the intervention is completed, and potentially beyond that. Outcome evaluation is used to describe the results that were achieved that justify the investment of time, energy, and resources in the intervention. Since it can take many years to demonstrate long-term outcomes, many programs focus on short-term and intermediate outcomes.
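
The amount of change from baseline can be expressed as an absolute change or as a change relative to the baseline value. The Python sketch below shows the arithmetic; the function name `change_from_baseline` and the example values are hypothetical and are not drawn from any real program.

```python
def change_from_baseline(baseline, follow_up):
    """Return (absolute change, percent change relative to baseline).

    Assumes baseline is not zero; a zero baseline has no meaningful percent change.
    """
    absolute = follow_up - baseline
    percent = absolute / baseline * 100
    return absolute, percent


# Hypothetical values: percentage of participants who check their blood glucose daily.
absolute, percent = change_from_baseline(baseline=40.0, follow_up=50.0)
print(f"{absolute:+.1f} percentage points ({percent:+.1f}% relative change)")
```

Reporting both figures helps stakeholders see the size of the change as well as how large it is compared with where the program started.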

Outcome evaluation questions can be drawn from the right side of your logic model, which lists the various expected outcomes of the intervention, separated by timeframe. The changes that can be expected for each time point are as follows:

- Short-term outcome questions focus on changes in knowledge, skills, beliefs, and attitudes.

- Intermediate (or Medium-term) outcome questions focus on sustained behavior changes.

- Long-term outcome questions focus on changes in the environment (e.g. policies or social norms) or health status (e.g. morbidity and mortality).

Use the Evaluation Plan Workbook’s Section 3A: Focus the Evaluation – Evaluation Questions, Outcome Evaluation portion on page 4 to help in developing outcome evaluation questions.

A Note on Performance Monitoring

Performance monitoring is the systematic collection and analysis of information during the implementation of an intervention over time. Monitoring refers to routine, ongoing data collection at regularly-scheduled intervals on a series of pre-determined measures. The data collected are pre-determined reach, participation, or completion numbers, rates, or time periods.

Performance monitoring is used to improve the efficiency and fidelity of implementation. It lets program managers know if implementation is on track. Monitoring also assures funders that activities are being implemented according to schedule to sufficient numbers of the target audience to achieve impact.
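
As an illustration of the kind of routine measures described above, the Python sketch below summarizes monthly enrollment and completion counts against a reach target. The records, the target, and the helper `monitoring_summary` are hypothetical assumptions made for this example.

```python
# Hypothetical monthly records: (month, participants enrolled, participants who completed).
records = [
    ("Jan", 20, 15),
    ("Feb", 25, 20),
    ("Mar", 30, 27),
]
target_enrollment = 100  # hypothetical reach target for the project period


def monitoring_summary(rows, target):
    """Summarize reach against the target and the overall completion rate."""
    enrolled = sum(r[1] for r in rows)
    completed = sum(r[2] for r in rows)
    return {
        "enrolled": enrolled,
        "percent_of_target": enrolled / target * 100,
        "completion_rate": completed / enrolled * 100,
    }


summary = monitoring_summary(records, target_enrollment)
print(summary)
```

Running the same summary at each scheduled interval gives program managers and funders a consistent view of whether implementation is on track.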

Performance monitoring provides a basis for process evaluation.

Indicators in Evaluation Planning

Once your evaluation questions are in place, you’ll need to determine what information is necessary to answer them. These pieces of information are called indicators - the observable, measurable signs that something has been completed or achieved. Indicators are written as short phrases that describe both what is being measured and the unit of measure. You will need indicators for both your process and outcome evaluation questions.

Process evaluation indicators reflect the intervention activities, outputs, and products. They measure timeliness, quality, consistency, costs, and reach, enabling you to monitor progress and determine whether the intervention is on target to reach its outputs. Examples include the number of sessions held or number of volunteers recruited.

Outcome evaluation indicators measure the results or changes that occur from the intervention. Examples include the percentage of adults in the state with a particular disease or the health care expenditures generated by patients in the target population.

Developing Indicators

Whenever possible, you should utilize indicators that have already been developed for the evidence-based intervention in question. If you must create new indicators, use your intervention logic models to guide you. Think about how you will be able to tell whether each activity, output, and outcome has been completed or achieved, and how you will keep track of that information.

Below is a list of characteristics of good indicators. Click here for a PDF of this list. Use the Evaluation Plan Workbook’s Section 3B: Focus the Evaluation – Indicators on page 5 to help in developing indicators. You can also explore the Health Indicators Warehouse for examples of indicators used for a wide variety of health topics at http://healthindicators.gov/.

Characteristics of Good Indicators

Good indicators are:

- Meaningful: Represent important information about the program for stakeholders.

- Relevant: Reflect the intervention’s intended activities, outputs, and outcomes.

- Direct: Closely measure the intended change.

- Objective: Have a clear operational definition of what is being measured and what data need to be collected.

- Reliable: Consistently measured across time and different data collectors.

- Useful: Can be used for program improvement and to demonstrate program outcomes.

- Adequate: Can measure change over time and progress toward performance or outcomes.

- Understandable: Easy to comprehend and interpret.

- Practical/feasible: The data for the indicator should not be too burdensome to collect. The indicator should be reasonable in terms of the data collection cost, frequency, and timeliness for inclusion in the decision-making process.

Evaluation Design

Your indicators tell you what data you need to answer your evaluation questions, but you also need to identify how you will collect the data. Your evaluation design outlines the type of data you want to get, where you will get data (sources), and how you will collect, manage, analyze, and interpret data (methods).

Data Type: The data collected may be of a quantitative (numeric) or qualitative (descriptive) nature.

Data Sources: Data may come from existing sources, such as agency records or patient files, or from new sources collected by you and your partners, such as through surveys or direct observation.

Methods: When deciding upon which data collection methods to use, consider which methods are:

- Most likely to secure the information you need to answer your evaluation questions

- Most appropriate given the cultural values, understanding, and capacities of those being asked to provide the information

- Most effective given your evaluation and data collection capacity

- Least disruptive to program implementation and participants

- Least costly

Considerations for Designing the Evaluation

When planning the evaluation design, you will need to determine:

- What the sample size is: Data can be collected from the entire participating population, or a sample of this population, depending on the evaluation purpose, the size of the population, and the methodology used.

- Which instruments will be used: You will need to decide what forms or devices will be used to collect and compile information. Whenever possible, it is best to use instruments that were developed and tested for the intervention you are using.

- Who will collect the data: You’ll need to have a plan for who will collect the data and how to train them to collect it. Potential options include an external evaluator, intervention implementers, or the participants themselves.

- When data will be collected (frequency): Data collection can occur at discrete intervals or continuously, depending on the methods used and resources available.
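
When data are collected from a sample rather than from the entire participating population, a simple random sample is one common option. The Python sketch below draws one using the standard library. The participant IDs, the sample size of 50, and the helper `draw_sample` are hypothetical; the appropriate sample size depends on your evaluation purpose and methodology.

```python
import random

participant_ids = list(range(1, 201))  # 200 hypothetical participant IDs


def draw_sample(population, size, seed=0):
    """Draw a simple random sample without replacement.

    A fixed seed makes the draw repeatable so the selection can be documented.
    """
    rng = random.Random(seed)
    return sorted(rng.sample(population, size))


sample = draw_sample(participant_ids, size=50)
print(len(sample), "participants selected")
```

Recording the seed alongside the sample is a small design choice that lets partners reproduce the same selection later if questions arise.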

Evaluation Data Compilation and Analysis

Your evaluation plan also will need to include information on how to compile and analyze the data.

Compiling Data: The data first need to be entered into a format that allows for aggregation and analysis (e.g. by entering data into a spreadsheet or database). Protocols for data entry and data cleaning should guide this work to ensure data are entered consistently and errors are identified and corrected.

Analyzing Data: The techniques used to analyze the data depend on the type of data collected and the design of your evaluation, but you will generally include both quantitative and qualitative analysis:

- Quantitative data analysis is the statistical analysis of numerical data. The statistical methods used are dictated by the number of participants and the evaluation design. You will need to provide descriptive statistics on the basic characteristics of participants, the intervention, and the outcomes achieved regardless of evaluation design.

- Qualitative data analysis is the content analysis of non-numerical descriptive data. This entails a close reading and coding of text or transcribed interviews, grouping text extracts by themes, and finding relationships or clusters.
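
The two kinds of analysis above can be sketched briefly in Python using only the standard library. All of the data shown are hypothetical, and the theme codes stand in for codes an evaluator would assign after a close reading of the text; this is a minimal illustration, not a complete analysis plan.

```python
from collections import Counter
from statistics import mean, median

# Hypothetical quantitative data: sessions attended by each participant.
sessions_attended = [6, 8, 5, 8, 7, 3, 8]

# Hypothetical qualitative data: theme codes assigned to interview excerpts.
coded_excerpts = [
    "transportation", "cost", "transportation",
    "scheduling", "cost", "transportation",
]

# Descriptive statistics for the numerical data.
summary = {
    "n": len(sessions_attended),
    "mean": round(mean(sessions_attended), 2),
    "median": median(sessions_attended),
}

# Group coded excerpts by theme and count how often each theme appears.
theme_counts = Counter(coded_excerpts).most_common()

print(summary)
print(theme_counts)
```

Counting themes is only a first step in qualitative analysis; the relationships among themes still need to be interpreted by people familiar with the program.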

Section III: Planning for Evaluation cont'd

Interpret Evaluation Data

In addition to analyzing the data, you’ll need to have plans in place to interpret the information. When interpreting the data, you are applying meaning to the analyzed data by putting the results in context and forming conclusions. For evaluations, these conclusions are comparative – you are comparing your results to the baseline data, the desired outcomes, or results obtained elsewhere (e.g. literature). Because the same information can be understood in different ways, you should plan to engage and solicit feedback from multiple stakeholders when interpreting the data.
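One simple way to make these comparisons concrete is to express a result as a change from baseline and as progress toward the desired outcome. The sketch below assumes a single numerical indicator with a known baseline and target; the function name and the example figures are hypothetical and are not drawn from any real program.

```python
def compare_to_baseline(baseline, result, target):
    """Summarize a result relative to its baseline and target.

    Returns the absolute change, the percent change from baseline, and the
    percent of the baseline-to-target gap that has been closed. Percent
    values are None when the denominator would be zero.
    """
    change = result - baseline
    gap = target - baseline
    return {
        "change": change,
        "percent_change": change * 100 / baseline if baseline else None,
        "percent_of_target_gap_closed": change * 100 / gap if gap else None,
    }


# Hypothetical indicator: percent of participants meeting a program goal.
summary = compare_to_baseline(baseline=40, result=46, target=50)
print(summary)
```

Because the gap is calculated as target minus baseline, the same function can be used for indicators you want to lower (for example, a baseline of 20, a target of 15, and a result of 18 closes 40 percent of the gap). Numbers like these still need to be put in context with stakeholders, since they do not explain why a change occurred.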

Use the Evaluation Plan Workbook Section 5: Analyze & Interpret Evaluation Findings on pages 11 and 12 as a guide to developing a plan to analyze and interpret evaluation data.

Section III: Planning for Evaluation cont'd

Share and Apply Evaluation Findings

You should also plan to share your results with funders, partners, and other stakeholders. A good way to communicate the results is through a comprehensive evaluation report. Other communication formats, such as summaries and presentations, should also be developed to share your results with other interested parties, such as policymakers, advocacy organizations, and the public.

Finally, applying the findings is an important part of the continuous cycle of program implementation. Once the evaluation results have been shared with partners, the implementation and evaluation plans can be altered for the next round of implementation based on the findings.

Use the Evaluation Plan Workbook Section 6: Share & Apply Evaluation Findings on page 13 as a guide to developing a plan to share and apply evaluation results, including the major components of an evaluation report.

Section III Summary & Activity

Section III described the steps necessary to create a robust evaluation plan. Now, consider the following questions about evaluation planning.

- Go to the Health Indicators Warehouse website. Use the website’s filter functions to find five examples of indicators that might be relevant to your plan.

- Think about it: How can preparing the evaluation plan for different interventions create a sense of buy-in on the part of planning group members and coalition partners?

Planning Group Meeting #4: Building Logic Models and Implementation/Evaluation Plans

After the planning group selects appropriate interventions (discussed in Module 4), the group will need to hold another series of meetings in which workgroups build logic models and implementation and evaluation plans for the selected interventions. The planning group may have a professional evaluator, such as a staff person with the state department of health, assist with presenting activities. The main actions to accomplish during the meeting(s) are:

- Introduce logic models and how they are used in implementation and evaluation planning

- Workgroups develop logic models for a specific intervention

- Present logic models to planning group for explanation and Q&A

- Describe implementation plans and how they are used

- Workgroups draft implementation plans for their interventions

- Present implementation plans to planning group for explanation and Q&A.

You and your partners may decide to focus on developing one or two of the interventions during the larger group meetings so that everyone learns how to develop logic models and prepare an implementation plan. In addition, workgroups can meet separately as often as needed to complete their assignments, and bring their work back to the group for review. Workgroup members also can continue to gather input and feedback from their respective organizations.

Click here to download a sample agenda that shows how these steps could fit into a planning group meeting (or meetings).

Planning Group Meeting #5: Developing Evaluation Plans

The concepts covered in Module 5 apply directly to your efforts to organize meetings of the planning group to create and implement a state plan.

Once each intervention’s logic model and implementation plans have been developed, the evaluation planning meetings can begin. The planning group may have professional evaluators and staff with the state department of health assist with presenting information and guiding workgroups through their activities. The main actions to accomplish during the meeting(s) are:

- Provide background information on the CDC’s Framework for Program Evaluation and definitions for process and outcome evaluation

- Workgroups develop process and outcome evaluation questions for each intervention

- Workgroups develop indicators for each evaluation question

- Describe methods for data collection, management, and analysis

- Review Evaluation Workbook and Worksheets.

Afterward, the workgroup members can continue to work on their evaluation questions and indicators. Once each workgroup has completed its evaluation plan, an evaluator can compile a master evaluation plan and a list of instruments and indicators.

Click here to download a sample agenda that shows how these steps could fit into a planning group meeting (or meetings).

Module 5 Summary

Module 5 discussed developing logic models, implementation plans, and evaluation plans with your partners. It described:

- The purpose and main components of logic models and how to link components together to create a complete logic model

- The purpose and basic elements of implementation plans, and the different types of implementation plans

- The purpose of evaluation plans, and the steps necessary to create them

These tools help you and your partners work through the tasks associated with making interventions a reality and measuring their progress toward achieving goals. Development of these tools also engages partners in implementation, provides a clear picture of next steps, and describes how you will document successes and lessons learned. Next, Module 6 will describe how to use these tools to develop goals, strategies, objectives, activities, and targets.

You can download a PDF of helpful resources for more information.

Continue the course with Module 6: Writing Goals, Strategies, Objectives, and Activities.
